Just think: what is the total processing power of all the smartphones in the world? It is a huge computing resource, one that could perhaps even emulate the workings of the human brain. It seems a waste to let such a resource sit idle, or to burn kilowatts of energy on chats and social network feeds. If these computing resources were handed to a single distributed global AI, and it were also supplied with data from users' smartphones for training, such a system could make a qualitative leap in this field.

Standard machine learning methods require that the data set used to train the model (the “primary” data) be collected in one place: on one computer or server, or in one data center or cloud. From there it is picked up by the model that is trained on it. When training runs on a cluster of computers in a data center, stochastic gradient descent (SGD) is used, an optimization algorithm that repeatedly iterates over parts of a data set that is homogeneously distributed across servers in the cloud.
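As a rough illustration of the idea, here is a minimal sketch of mini-batch SGD on centrally stored data. The one-parameter-pair linear model, the function name `sgd_linear_fit`, the learning rate and the toy data are all assumptions made for this example, not anything taken from Google's systems.

```python
import random

def sgd_linear_fit(data, lr=0.05, epochs=500, batch_size=4, seed=0):
    """Fit y ~ w*x + b with mini-batch stochastic gradient descent."""
    rng = random.Random(seed)
    data = list(data)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(data)  # visit the data in a new random order each epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Gradient of the mean squared error over this batch only
            grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

# Toy data set held in one place: points on the line y = 2x + 1
points = [(k / 10, 2 * (k / 10) + 1) for k in range(20)]
w, b = sgd_linear_fit(points)
```

Each step looks at only a small part of the data set, which is what makes the method practical when the data is spread over many servers.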

Google, Apple, Facebook, Microsoft and the other AI players have been doing this for a long time: they collect data, sometimes confidential, from users' computers and smartphones into a single (supposedly) secure store, where they train their neural networks.

Now scientists from Google Research have presented an interesting addition to this standard method of machine learning. They proposed an innovative approach called Federated Learning. It lets all the devices that take part in training share a single prediction model, without sharing the primary data used to train it!

Such an unusual approach may reduce the effectiveness of machine learning (although that is not certain), but it significantly reduces Google's data center costs. Why should the company invest huge sums in its own hardware if it has billions of Android devices around the world that can share the load among themselves? Users may even welcome this load, because they are helping to improve the services they themselves use. They also protect their confidential data by not sending it to the data center.

Google emphasizes that this is not simply a case of an already trained model running directly on the user's device, as happens in the Mobile Vision API and On-Device Smart Reply. No, it is the training of the model itself that is carried out on the end devices.

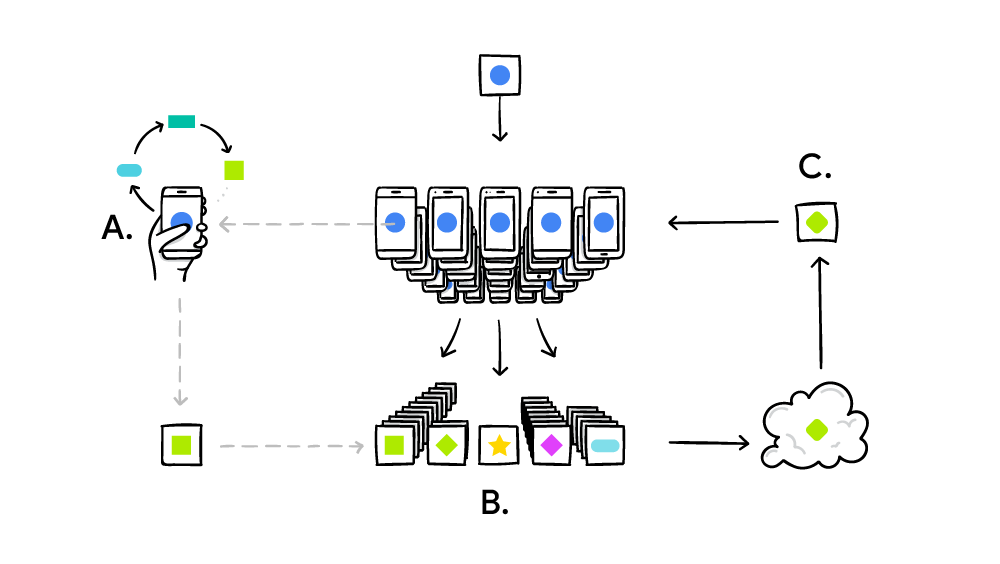

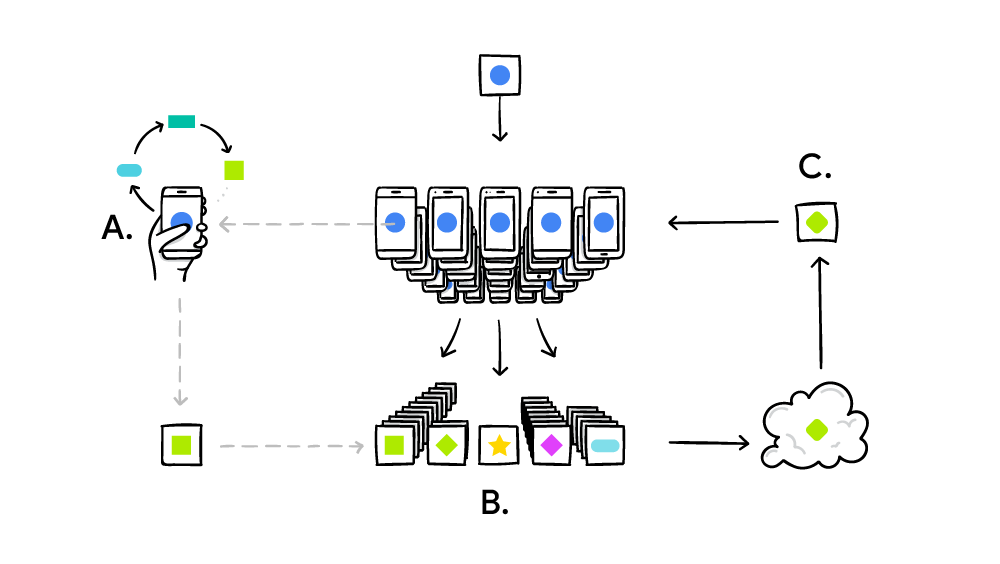

The federated learning system works on the standard principle of distributed computing projects such as SETI@home, in which millions of computers work on one large, complex problem. In the case of SETI@home, the problem was the search for anomalies in radio signals from space across the full width of the spectrum. In the case of Google's federated machine learning, it is the improvement of a single shared model of (for now) weak AI. In practice, the training cycle is implemented as follows:

- the smartphone downloads the current model;

- using a miniature version of TensorFlow, it runs a training cycle on the unique data of that particular user;

- improves the model;

- computes the difference between the improved model and the original one, and builds a patch using the Secure Aggregation cryptographic protocol, which allows the data to be decrypted only once hundreds or thousands of patches from other users are available;

- sends a patch to the central server;

- the received patch is immediately averaged with thousands of patches received from other participants in the experiment, using the Federated Averaging algorithm;

- a new version of the model is rolled out;

- an improved model is sent to the participants of the experiment.
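The cycle above can be sketched in a few lines of Python. This is only a toy under stated assumptions: the model is a two-parameter linear fit rather than a neural network, plain Python stands in for TensorFlow, patches are sent unencrypted, and the names `local_update` and `federated_round` are hypothetical. Weighting each patch by the size of the client's local data set follows the Federated Averaging paper.

```python
import random

def local_update(model, data, lr=0.05, epochs=5):
    """Client side: train a copy of the model on local data, return the patch."""
    w, b = model
    for _ in range(epochs):
        for x, y in data:
            err = w * x + b - y
            w -= lr * 2 * err * x
            b -= lr * 2 * err
    # The patch is the difference between the improved and the original model
    return (w - model[0], b - model[1])

def federated_round(model, clients):
    """Server side: average the patches, weighted by local data set size."""
    total = sum(len(data) for data in clients)
    dw = db = 0.0
    for data in clients:
        pw, pb = local_update(model, data)  # raw data never leaves the client
        dw += pw * len(data) / total
        db += pb * len(data) / total
    return (model[0] + dw, model[1] + db)

# Ten simulated devices, each with its own private samples of y = 2x + 1
rng = random.Random(0)
clients = []
for _ in range(10):
    xs = [rng.uniform(0, 2) for _ in range(rng.randint(5, 15))]
    clients.append([(x, 2 * x + 1) for x in xs])

model = (0.0, 0.0)
for _ in range(200):
    model = federated_round(model, clients)
```

Only the patches cross the network here; the server calls `local_update` directly purely to keep the simulation in one process.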

Federated Averaging is very similar to the stochastic gradient descent described above, except that the initial computations take place not on servers in the cloud but on millions of remote smartphones. Its main achievement is 10 to 100 times less traffic with clients than the traffic with servers when stochastic gradient descent is used. The saving comes from high-quality compression of the updates that are sent from smartphones to the server. In addition, the Secure Aggregation cryptographic protocol is used here.
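The central idea behind aggregation of this kind, that the server can learn the sum of the updates without seeing any single one, can be illustrated with pairwise masks that cancel out. The sketch below is a heavy simplification and not Google's protocol: a single seeded generator stands in for the secrets that each pair of clients would really agree on, updates are plain integers, there is no handling of clients that drop out, and `MODULUS` and `masked_updates` are hypothetical names.

```python
import random

MODULUS = 2**32  # assumed modulus; all arithmetic wraps around this value

def masked_updates(updates, seed=0):
    """Add pairwise masks that cancel when all masked updates are summed."""
    masked = list(updates)
    rng = random.Random(seed)  # stand-in for per-pair shared secrets
    n = len(updates)
    for i in range(n):
        for j in range(i + 1, n):
            mask = rng.randrange(MODULUS)
            masked[i] = (masked[i] + mask) % MODULUS  # client i adds the mask
            masked[j] = (masked[j] - mask) % MODULUS  # client j subtracts it
    return masked

updates = [3, 10, 7, 25]        # each client's private, integer-encoded update
masked = masked_updates(updates)
total = sum(masked) % MODULUS   # the server only ever adds up masked values
```

Each masked value on its own looks random, but every mask is added once and subtracted once, so `total` equals the true sum of the updates.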

Google promises that the smartphone will perform computations for the distributed global AI system only while it is idle, so performance will not be affected in any way. Moreover, the work can be restricted to times when the smartphone is plugged into mains power, so these computations will not even affect battery life. At the moment, federated machine learning is already being tested on contextual suggestions in the Google keyboard, Gboard on Android.

The Federated Averaging algorithm is described in more detail in the scientific paper Communication-Efficient Learning of Deep Networks from Decentralized Data, which was published on arXiv.org on February 17, 2016 (arXiv:1602.05629).