photos

From a single photo, it is very difficult to determine automatically which parts of the image are the original print and which are glare. Moreover, glare can wash out parts of the photo completely, making it impossible to recover what lies underneath. But if you take several pictures of the photo while moving the camera, the glare changes position and lands on different parts of the photo each time. In most cases, every pixel will be glare-free in at least one of the pictures. So although no single picture is free of glare, we can combine several pictures of the printed photo taken from different angles and thereby remove the glare. The difficulty is that the images must be aligned very accurately to be combined correctly, and this processing has to run on the smartphone fast enough to feel nearly instantaneous.

Our technology was inspired by our earlier work, published at SIGGRAPH 2015, which we called “obstruction-free photography”. It uses similar principles to remove various obstructions from the field of view. However, the original algorithm was based on a generative model in which the motion and appearance of both the main scene and the obstruction were estimated. That model is very capable and can remove a wide variety of obstructions, but it is too computationally expensive to run on smartphones. We therefore developed a simpler model that treats glare as an outlier and only tries to recover the underlying image. Even with this simplification, the task is still hard: the recovery must be both accurate and robust.

How it works

We start with a set of photos taken by the user while moving the camera. The first picture, the “reference frame”, defines the desired output viewpoint. The user is then guided to take four additional pictures. In each frame we detect sparse keypoints (we compute ORB features on Harris corners) and use them to estimate homographies that map each subsequent frame onto the reference frame.
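The homography estimation can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not PhotoScan's code: it assumes the ORB/Harris keypoints have already been detected and matched between a frame and the reference frame (for example with OpenCV's `cv2.ORB_create` and a Hamming-distance `cv2.BFMatcher`), and it fits the homography with a normalized Direct Linear Transform (DLT) inside a basic RANSAC loop so that a few wrong matches do not spoil the result. The function names, iteration count and 2-pixel inlier threshold are illustrative choices.

```python
import numpy as np


def _normalize(pts):
    """Hartley normalization: centroid at the origin, mean distance sqrt(2)."""
    mean = pts.mean(axis=0)
    scale = np.sqrt(2.0) / np.mean(np.linalg.norm(pts - mean, axis=1))
    T = np.array([[scale, 0.0, -scale * mean[0]],
                  [0.0, scale, -scale * mean[1]],
                  [0.0, 0.0, 1.0]])
    return (pts - mean) * scale, T


def estimate_homography(src, dst):
    """Normalized DLT: 3x3 H with dst ~ H @ [x, y, 1] from N >= 4 point pairs."""
    src_n, T_src = _normalize(np.asarray(src, float))
    dst_n, T_dst = _normalize(np.asarray(dst, float))
    rows = []
    for (x, y), (u, v) in zip(src_n, dst_n):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # In normalized coordinates H is the null vector of A: the last row of V^T.
    _, _, vt = np.linalg.svd(np.array(rows))
    H = np.linalg.inv(T_dst) @ vt[-1].reshape(3, 3) @ T_src
    return H / H[2, 2]


def apply_homography(H, pts):
    """Map (N, 2) points through H, including the perspective divide."""
    pts = np.asarray(pts, float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


def ransac_homography(src, dst, iters=200, thresh=2.0, seed=0):
    """Fit H on random 4-point samples, keep the largest inlier set, refit on it."""
    src, dst = np.asarray(src, float), np.asarray(dst, float)
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(iters):
        idx = rng.choice(len(src), size=4, replace=False)
        H = estimate_homography(src[idx], dst[idx])
        err = np.linalg.norm(apply_homography(H, src) - dst, axis=1)
        inliers = err < thresh
        if inliers.sum() > best.sum():
            best = inliers
    return estimate_homography(src[best], dst[best]), best
```

In an OpenCV pipeline, `cv2.findHomography(src, dst, cv2.RANSAC, 2.0)` performs an equivalent robust fit; the explicit version above only shows what that step computes.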

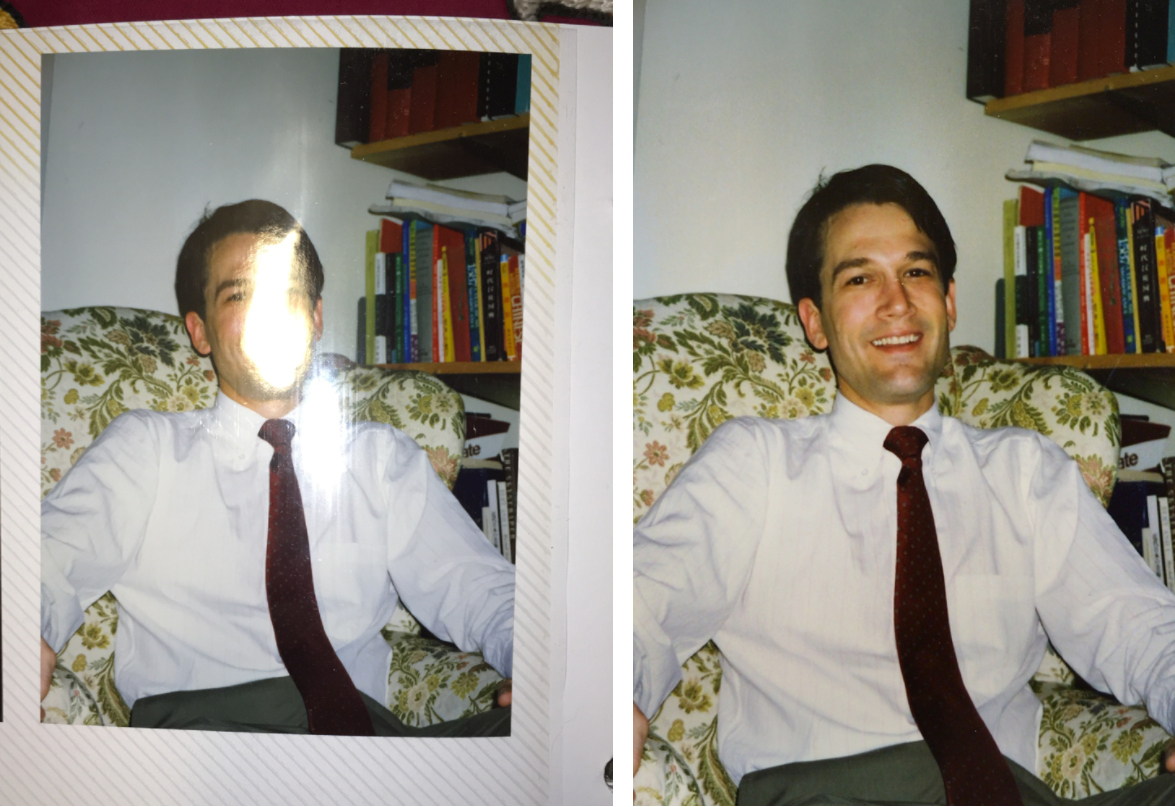

The technique may sound straightforward, but there is a catch: homographies can only align flat images, and printed photos are often not flat (as in the example above). We therefore use optical flow, a fundamental representation of motion in computer vision that establishes pixel-wise correspondence between two images, to correct for deviations from the plane. We start from the homography-aligned frames and compute “flow fields” that warp the images to refine the alignment further. Notice how, in the example below, the corners of the photo on the left “move” slightly when the frames are registered with homographies alone. On the right, you can see how much better the photo is aligned after the optical-flow refinement.

The difference is subtle, but it has a significant effect on the final result. Notice how small misalignments show up as duplicated image content (“ghosting”), and how these artifacts disappear after the additional refinement with optical flow.
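To make “warping with a flow field” concrete, here is a minimal NumPy sketch of the warping half of the refinement only (estimating the flow is a separate step). It assumes a grayscale, homography-aligned frame and a dense flow field `(u, v)` defined on the reference grid, and it resamples the frame with bilinear interpolation so that `out[y, x] = frame[y + v, x + u]`. The function name and the clamp-to-border behavior are assumptions for illustration.

```python
import numpy as np


def warp_with_flow(frame, flow):
    """Backward-warp a grayscale frame (H, W) by a dense flow (H, W, 2) = (u, v).

    Samples frame at (x + u, y + v) with bilinear interpolation; sample
    positions that fall outside the frame are clamped to its border.
    """
    h, w = frame.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    sx = np.clip(xs + flow[..., 0], 0, w - 1)
    sy = np.clip(ys + flow[..., 1], 0, h - 1)
    x0, y0 = np.floor(sx).astype(int), np.floor(sy).astype(int)
    x1, y1 = np.minimum(x0 + 1, w - 1), np.minimum(y0 + 1, h - 1)
    fx, fy = sx - x0, sy - y0  # fractional offsets inside the pixel cell
    top = frame[y0, x0] * (1 - fx) + frame[y0, x1] * fx
    bottom = frame[y1, x0] * (1 - fx) + frame[y1, x1] * fx
    return top * (1 - fy) + bottom * fy
```

Because the flow is measured on the reference grid and points into the moving frame, every output pixel receives exactly one value, with no holes to fill.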

Here, too, there was a challenge: making an optical flow algorithm, which is inherently slow, run fast on a phone. Instead of computing the flow in the traditional way, with one flow vector per pixel (as many vectors as there are pixels), we represent the flow field with a smaller set of control points and express the motion of each pixel as a function of the motion of those control points. Specifically, we divide each image into non-overlapping cells that form a coarse lattice, and represent the flow of each pixel in a cell as a bilinear combination of the flow at the four corners of the cell that contains it.
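The lattice representation described above can be sketched as follows: flow vectors live only at lattice nodes spaced `cell` pixels apart, and each pixel's flow is the bilinear blend of the four nodes at the corners of its cell. This is an illustrative NumPy sketch; the node layout (node `(i, j)` at pixel `(i * cell, j * cell)`) and the function name are assumptions.

```python
import numpy as np


def dense_flow_from_lattice(node_flow, cell, height, width):
    """Expand flow given at lattice nodes (gh, gw, 2) into per-pixel flow (H, W, 2).

    Node (i, j) sits at pixel (y, x) = (i * cell, j * cell); each pixel's flow is
    the bilinear blend of the four nodes at the corners of the cell containing it.
    """
    gh, gw, _ = node_flow.shape
    if (gh - 1) * cell < height - 1 or (gw - 1) * cell < width - 1:
        raise ValueError("the lattice must cover the whole image")
    ys, xs = np.mgrid[0:height, 0:width]
    gy, gx = ys / cell, xs / cell                       # continuous lattice coords
    i0 = np.minimum(np.floor(gy).astype(int), gh - 2)   # top-left node of the cell
    j0 = np.minimum(np.floor(gx).astype(int), gw - 2)
    ty, tx = (gy - i0)[..., None], (gx - j0)[..., None]  # position inside the cell
    top = (1 - tx) * node_flow[i0, j0] + tx * node_flow[i0, j0 + 1]
    bottom = (1 - tx) * node_flow[i0 + 1, j0] + tx * node_flow[i0 + 1, j0 + 1]
    return (1 - ty) * top + ty * bottom
```

For a hypothetical 4000 x 3000 frame with 32-pixel cells, this would replace about 12 million per-pixel flow vectors with roughly 12,000 node vectors.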

This leaves a much smaller problem to solve, since the number of flow vectors now equals the number of lattice points, which is typically far smaller than the number of pixels. The approach is similar in spirit to spline-based image registration. With this algorithm, we reduced the optical flow computation time on the Pixel phone by roughly 40 times!
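To show why fewer unknowns make the problem cheaper, the sketch below solves for lattice flow directly. The source does not state PhotoScan's flow objective, so this uses a swapped-in, textbook formulation as an assumption: one linearized brightness-constancy step, `Ix * u + Iy * v + It = 0` at every pixel, with per-pixel flow written as `B @ node_flow` (bilinear weights) and a small Tikhonov term `lam` standing in for a real smoothness prior. A practical solver would iterate this coarse-to-fine with re-warping; all names here are hypothetical.

```python
import numpy as np


def bilinear_weights(height, width, cell, gh, gw):
    """Matrix B (pixels x nodes) so that per-pixel flow = B @ node flow (row-major)."""
    ys, xs = np.mgrid[0:height, 0:width]
    gy, gx = ys.ravel() / cell, xs.ravel() / cell
    i0 = np.minimum(np.floor(gy).astype(int), gh - 2)
    j0 = np.minimum(np.floor(gx).astype(int), gw - 2)
    ty, tx = gy - i0, gx - j0
    B = np.zeros((height * width, gh * gw))
    rows = np.arange(height * width)
    corners = [(0, 0, (1 - ty) * (1 - tx)), (0, 1, (1 - ty) * tx),
               (1, 0, ty * (1 - tx)), (1, 1, ty * tx)]
    for di, dj, wgt in corners:
        B[rows, (i0 + di) * gw + (j0 + dj)] += wgt
    return B


def solve_lattice_flow(Ix, Iy, It, cell, gh, gw, lam=1e-3):
    """One linearized least-squares step for node flow of shape (gh, gw, 2).

    Minimizes sum_p (Ix*u_p + Iy*v_p + It_p)^2 + lam * |z|^2 with u = B @ U,
    v = B @ V and z = [U; V]: only 2 * gh * gw unknowns instead of 2 per pixel.
    """
    h, w = Ix.shape
    B = bilinear_weights(h, w, cell, gh, gw)
    A = np.hstack([Ix.reshape(-1, 1) * B, Iy.reshape(-1, 1) * B])
    lhs = A.T @ A + lam * np.eye(A.shape[1])
    z = np.linalg.solve(lhs, -A.T @ It.ravel())
    n = gh * gw
    return np.stack([z[:n], z[n:]], axis=-1).reshape(gh, gw, 2)
```

The normal equations have size `2 * gh * gw` regardless of image resolution, which is where the savings over a per-pixel solve come from.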

Finally, to compose the glare-free image, we take the pixel values from all aligned frames at each location and compute a “soft minimum” to obtain the darkest observation. More precisely, we compute the expected minimum brightness across the available frames, assigning lower weights to pixels close to the warped image boundaries. We use this method instead of taking the minimum directly because the brightness of the same pixel can vary between frames, so a hard per-pixel minimum can produce visible seams caused by abrupt intensity changes at the boundaries of overlapping images.
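One plausible reading of the “soft minimum” is a softmin-weighted average: each frame's value at a pixel gets a weight that decays exponentially with its brightness and is multiplied by an edge-feathering weight, so the darkest (glare-free) observation dominates without the hard switching of a plain minimum. The exact formulation PhotoScan uses is not given in the text; the temperature, the linear feather ramp and the function names below are assumptions.

```python
import numpy as np


def edge_feather(height, width, ramp):
    """Weight in (0, 1] that falls off linearly within `ramp` pixels of the frame edge."""
    ys, xs = np.mgrid[0:height, 0:width]
    dist = np.minimum.reduce([xs, width - 1 - xs, ys, height - 1 - ys])
    return np.clip((dist + 1) / ramp, 0.0, 1.0)


def soft_min_composite(frames, weights, temperature=0.05):
    """Blend aligned frames so the darkest value at each pixel dominates.

    frames:  (K, H, W) brightness of K aligned frames, roughly in [0, 1].
    weights: (K, H, W) per-frame confidence: 0 where a frame has no data and
             smaller near its edges (e.g. an edge_feather map warped like the frame).
    """
    frames = np.asarray(frames, float)
    weights = np.asarray(weights, float)
    # Fold the weights into the exponent as log-weights: one stable softmax over K.
    logits = -frames / temperature + np.log(np.maximum(weights, 1e-300))
    logits -= logits.max(axis=0, keepdims=True)
    p = np.exp(logits)
    return (p * frames).sum(axis=0) / p.sum(axis=0)
```

As `temperature` shrinks, the result approaches a weighted hard minimum; as it grows, it approaches a weighted mean, which trades glare suppression for smoother transitions between frames.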

The algorithm works under a variety of scanning conditions: matte and glossy prints, photos inside and outside albums, and magazine covers.

To produce the final result, our team also developed a method that automatically detects the boundaries of the photo and rectifies it to a rectangle. Because of perspective distortion, a rectangular photo usually appears in the scan as a non-rectangular quadrilateral. The method analyzes image cues such as color and edges to determine exactly where the borders of the original photo lie, and then applies a geometric transformation to straighten the image. The result is a high-quality digital copy of the paper photo, free of glare.
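The geometric straightening step can be sketched as follows, assuming the four corners of the photo have already been found (the color- and edge-based boundary detection is not shown). The sketch computes the homography that maps the output rectangle onto the detected quadrilateral by solving the 8x8 linear system with `h33 = 1`, then fills each output pixel by inverse mapping with nearest-neighbor sampling. The corner order (top-left, top-right, bottom-right, bottom-left, as `(x, y)`) and the function names are assumptions.

```python
import numpy as np


def rect_to_quad_homography(quad, width, height):
    """H mapping output-rectangle corners onto quad corners (TL, TR, BR, BL as (x, y))."""
    rect = [(0, 0), (width - 1, 0), (width - 1, height - 1), (0, height - 1)]
    A, b = [], []
    for (x, y), (u, v) in zip(rect, quad):
        # u * (h31 x + h32 y + 1) = h11 x + h12 y + h13, and likewise for v.
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = np.linalg.solve(np.array(A, float), np.array(b, float))
    return np.append(h, 1.0).reshape(3, 3)


def rectify(image, quad, width, height):
    """Resample the quadrilateral region of `image` into a width x height rectangle."""
    H = rect_to_quad_homography(quad, width, height)
    ys, xs = np.mgrid[0:height, 0:width]
    pts = np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)])
    u, v, s = H @ pts
    ui = np.clip(np.rint(u / s).astype(int), 0, image.shape[1] - 1)
    vi = np.clip(np.rint(v / s).astype(int), 0, image.shape[0] - 1)
    return image[vi, ui].reshape((height, width) + image.shape[2:])
```

Mapping from the output back into the input guarantees every output pixel is filled; a production version would use bilinear rather than nearest-neighbor sampling.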

All in all, a lot happens under the hood, and all of it runs almost instantly on your phone! You can try PhotoScan by downloading the app for Android or iOS.